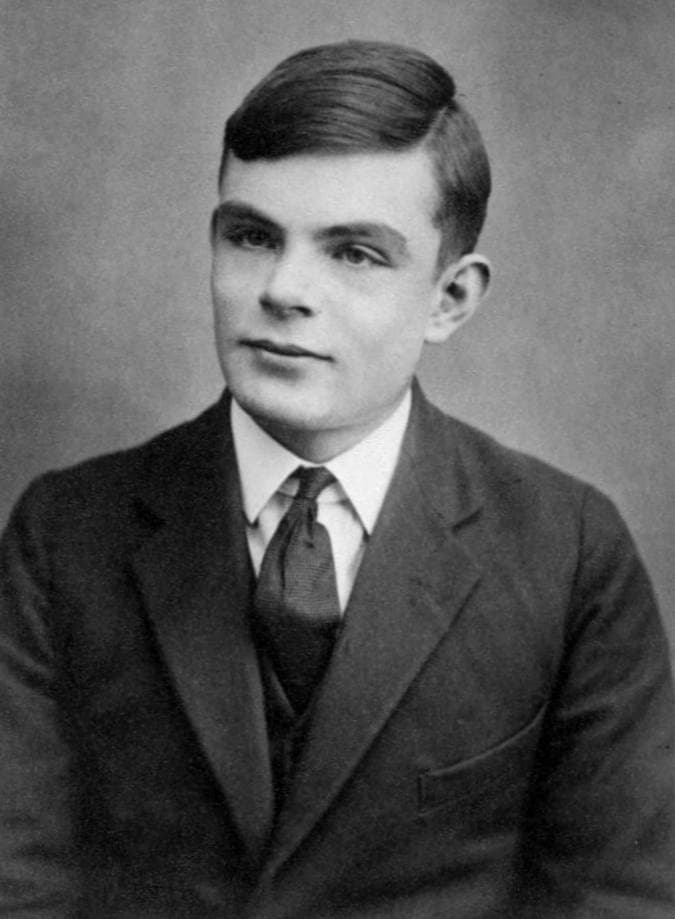

Alan Turing

2023-01-18

4 min read

Building a Generatively Pretrained Transformer (GPT) with the Help of GPT

In a recently released YouTube video, Andrej Karpathy shows how to build your own version of ChatGPT from scratch. He builds a Generatively Pretrained Transformer following the architecture described in the seminal paper “Attention Is All You Need”. Published in 2017, the paper was revolutionary in proposing the Transformer, an architecture based on attention mechanisms that removes the need for recurrence and convolutions.

It’s a fun, educational video, and the creator does his best to carry viewers along. To get the most out of it, first watch this ML video playlist on the autoregressive language modeling framework and the basics of tensors and PyTorch neural networks. The video assumes that most viewers have a firm grasp of both.

What Is the Underpinning GPT Framework? How Does GPT Work?

At its core, GPT is a language prediction model: a neural network that takes human-readable text as input and predicts what comes next. Earlier sequence models relied on recurrence or convolution to transform the input before making a prediction. In GPT, the Transformer (which uses the attention mechanism) does that transformation. A large amount of data went into training and fine-tuning the model. There’s more to it, but these are the basics.
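To make "language prediction model" concrete, here is a minimal sketch of the simplest possible one: a character-level bigram model that predicts the next character from the current one by counting. It is written in plain Python rather than PyTorch, and the function names, the toy text, and the add-one smoothing are illustrative assumptions, not code from the video.

```python
import math
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def next_char_probs(counts, prev, vocab):
    """Turn counts into a probability distribution over the next character.

    Add-one smoothing (an assumption of this sketch) keeps unseen pairs
    from getting probability zero.
    """
    total = sum(counts[prev].values()) + len(vocab)
    return {ch: (counts[prev][ch] + 1) / total for ch in vocab}

def cross_entropy(counts, text, vocab):
    """Average negative log-probability assigned to each actual next character."""
    losses = [
        -math.log(next_char_probs(counts, prev, vocab)[nxt])
        for prev, nxt in zip(text, text[1:])
    ]
    return sum(losses) / len(losses)

text = "to be or not to be"          # toy stand-in for a real training corpus
vocab = sorted(set(text))
counts = train_bigram(text)
probs = next_char_probs(counts, "t", vocab)
loss = cross_entropy(counts, text, vocab)
uniform_loss = math.log(len(vocab))  # loss of a model that guesses uniformly
```

A model that has learned anything should score a lower loss than `uniform_loss`. A GPT has the same prediction interface but replaces the count table with a Transformer that looks at the whole preceding context.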

Can You Re-Create ChatGPT?

Technically, you can. The tools and knowledge required to create it are available for free online. However, the training data and the level of fine-tuning are not at your disposal, unless you’re a billion-dollar company like OpenAI. In that case, we love you. Keep up the excellent work.

With this in mind, the project uses a much smaller dataset: all of Shakespeare’s work. This dataset amounts to about 1MB of text, a reasonable size for a demonstration.
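Before a text dataset can be fed to a model, it has to be turned into integers. A minimal sketch of character-level tokenization is shown here, assuming one token per distinct character. The helper names, the short sample string standing in for the full Shakespeare file, and the 90/10 train/validation split are assumptions made for illustration.

```python
def build_vocab(text):
    """Map every distinct character to an integer id and back."""
    chars = sorted(set(text))
    stoi = {ch: i for i, ch in enumerate(chars)}
    itos = {i: ch for ch, i in stoi.items()}
    return stoi, itos

def encode(s, stoi):
    """String -> list of integer token ids."""
    return [stoi[c] for c in s]

def decode(ids, itos):
    """List of integer token ids -> string."""
    return "".join(itos[i] for i in ids)

# Short stand-in for the roughly 1MB Shakespeare text file.
sample = "First Citizen:\nBefore we proceed any further, hear me speak."
stoi, itos = build_vocab(sample)
ids = encode("hear me", stoi)

# Hold out the tail of the data to measure validation loss (assumed 90/10 split).
n = int(0.9 * len(sample))
train_text, val_text = sample[:n], sample[n:]
```

Swapping in a different dataset only requires changing the text that `build_vocab` sees, which is why any plain-text corpus works.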

Summary

nanoGPT is a repository on GitHub for training Transformers. The repo is available for you to download and use. Even though the project uses Shakespeare’s work, you can supply any dataset you like, as long as the training data is in text format. The repo also contains only about 300 lines of code, so brevity is not a lost cause.

- The Transformer was trained, and a loss of 4.1217 was achieved. This loss is the value of the cost function evaluated on the training data.

- The basic model is then converted into a script with configurable hyperparameters, which further simplifies the final product.

- Matrix multiplication and torch functions were used to operate on a B×T×C tensor (batch, time, channels). Multiplying it by a lower-triangular weight matrix replaces each token with the average of itself and all preceding tokens, thereby allowing the tokens to communicate.

- Going further, self-attention is used to create data-dependent affinities between tokens in a sequence. To do this, all that is needed is to take the dot product of the query and the key vectors.

- Attention is then implemented by softmaxing the query-key dot products and using the result as weights to aggregate the value vectors. Scaling the dot products before the softmax (by one over the square root of the head size) is important: without it, large values push the softmax toward one-hot vectors.

- The project implemented scaled attention, multi-headed self-attention, feed-forward computation, skip connections, and a projection layer. Together these make a deep network easier to optimize, and the model reached a validation loss of 2.08.

- In addition, Transformers were trained with Layer Norm and Dropout to generate text and summarize documents.
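The averaging and self-attention steps in the summary above can be sketched in a few lines. The version below is a single-head, pure-Python illustration under stated assumptions, not the PyTorch code from the video. Function names are hypothetical, and the causal mask is applied by only scoring positions up to the current token, which has the same effect as masking future positions before the softmax.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    queries, keys, values: lists of T vectors (lists of floats).
    Token t attends only to positions 0..t.
    """
    head_size = len(keys[0])
    scale = 1.0 / math.sqrt(head_size)  # keeps the softmax from saturating
    out = []
    for t, q in enumerate(queries):
        # Affinity of token t with each earlier token: scaled query-key dot product.
        scores = [
            scale * sum(qi * ki for qi, ki in zip(q, keys[s]))
            for s in range(t + 1)
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors of the visible tokens.
        out.append([
            sum(w * values[s][c] for s, w in enumerate(weights))
            for c in range(len(values[0]))
        ])
    return out

# With all-zero queries every score is 0, so the weights are uniform and
# attention reduces to averaging each token with all preceding tokens.
values = [[2.0], [4.0], [6.0]]
zeros = [[0.0, 0.0] for _ in values]
averaged = causal_attention(zeros, zeros, values)

# Effect of scaling: large raw scores give a nearly one-hot softmax.
raw_scores = [8.0, 0.0, -8.0]
sharp = softmax(raw_scores)
soft = softmax([s / math.sqrt(64) for s in raw_scores])
```

Here `averaged` is the running mean of `values`, and `max(sharp)` is much closer to 1 than `max(soft)`, which is the saturation that scaling is meant to avoid. Real queries and keys make the weights data-dependent instead of uniform.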

Skills and Knowledge Required to Create Your Version of GPT

You don’t need to be an Einstein to follow along with this project. Here are the skills and knowledge you need.

- Basic knowledge of statistics

- Basic knowledge of calculus

- Basic familiarity with Python

Conclusion

And that’s all for now! Hopefully this gives you all you need to go ahead and build the next best product in the world of AI. Here are other fun activities you can try.

- Train the GPT you just built using your own dataset of choice. It’ll be interesting to see what you come up with.

- Use a dataset large enough that the gap between train and val loss nearly disappears. Pretrain the Transformer on this data, then initialize from that model and fine-tune it on a smaller dataset with fewer steps and a lower learning rate. Can pretraining get you a lower validation loss?

- Try adding one feature that users might enjoy, and measure its impact on the performance of your GPT.
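For the pretrain-then-fine-tune exercise, it can help to write the two runs down as configurations that differ only where they should. The sketch below is purely illustrative: `TrainConfig`, its field names, the file names, and every number are hypothetical placeholders, not settings from nanoGPT or the video.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical settings for one training run."""
    dataset: str
    max_steps: int
    learning_rate: float
    init_from: str = "scratch"  # or the name of a saved checkpoint

# Stage 1: pretrain on the large dataset (placeholder values).
pretrain = TrainConfig(
    dataset="large_corpus.txt",
    max_steps=5000,
    learning_rate=3e-4,
)

# Stage 2: start from the pretrained weights, then use a smaller dataset,
# fewer steps, and a lower learning rate.
finetune = replace(
    pretrain,
    dataset="small_corpus.txt",
    max_steps=500,
    learning_rate=3e-5,
    init_from="pretrained_checkpoint",
)
```

Comparing the validation loss of `finetune` against a run trained from scratch on the small dataset alone answers the question the exercise poses.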